With the current enthusiasm for AI, and especially for LLMs (Large Language Models), I was tempted by the idea of running one of these models on a platform not designed for this: a Raspberry Pi.

Choice of the Raspberry Pi

I chose the Raspberry Pi 5, the most powerful model so far, with 8 GB of RAM. There are two reasons for this: running an LLM is obviously very demanding in CPU and memory resources, and the implementation I chose only works on the x86 and ARM64 architectures. You therefore need a Raspberry Pi that supports a 64-bit operating system. A Pi 4 might have been enough, but I think its memory is too limited.

For this experiment, I had an underlying goal: to check whether a language model is reasonably usable on a relatively small system (if a Raspberry Pi 5 with 8 GB of RAM can be called a constrained environment) with a slow Internet connection, or even none at all. The aim is to integrate an LLM into embedded Linux systems, for client projects or training sessions.

Choice of the LLM

Let's set the scope of this test: the goal is to run the language model locally on the Raspberry Pi, not to query a server in the cloud.

Many language models are available, more or less freely. Some require a subscription and an API key (Google's Gemini, Anthropic's Claude, OpenAI's GPT, etc.).

The solution that best fits my needs is Ollama, a tool released under a free license (MIT), capable of loading and running LLMs on Linux, Windows and macOS.

Installation and testing

Raspberry Pi OS

I installed the latest version of Raspberry Pi OS (04/07/2024), in its 64-bit edition based on Debian 12.

Ollama

Installing Ollama is extremely simple: a script to download and run.

The Ollama site suggests the following command, which downloads the script and pipes it directly into a shell.

$ curl -fsSL https://ollama.com/install.sh | sh

I prefer to look at the content of a script before running it, so I proceeded in two steps:

    cpb@raspberrypi:~ $ curl https://ollama.ai/install.sh > install.sh
    [...]
    cpb@raspberrypi:~ $ chmod +x install.sh

After a quick look at the content of the script, I run it as follows:

    cpb@raspberrypi:~ $ ./install.sh
    >>> Downloading ollama...
    ####################################################################################################################################################>
    >>> Installing ollama to /usr/local/bin...
    >>> Creating ollama user...
    >>> Adding ollama user to render group...
    >>> Adding ollama user to video group...
    >>> Adding current user to ollama group...
    >>> Creating ollama systemd service...
    >>> Enabling and starting ollama service...
    Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.
    >>> The Ollama API is now available at 127.0.0.1:11434.
    >>> Install complete. Run "ollama" from the command line.
    WARNING: No NVIDIA/AMD GPU detected. Ollama will run in CPU-only mode.

The script warns us that it found no Nvidia or AMD graphics processor (GPU). No surprise on a Raspberry Pi. As a result, it will do all the processing directly on the CPU cores.

Models

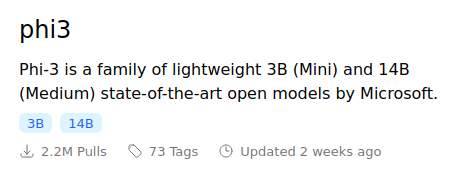

The Ollama Models page lists about a hundred available language models. Most come in two or three versions depending on the size of the neural network used. Let's take the example of the phi3 model:

The two versions, 3B (3 billion) and 14B (14 billion), correspond to the number of "parameters" of the model, i.e. roughly the number of connections between the neurons of the network. The higher this number, the greater the LLM's capabilities. But as the number of parameters grows, so do the memory needed to hold the model and the computing resources (CPU power, energy consumption) needed to run through it.

With a Raspberry Pi with 8 GB of RAM, it is unrealistic to expect to run models of more than 7 billion parameters, but that still leaves a good selection of models.
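To make the link between parameter count and memory concrete, here is a rough back-of-the-envelope sketch. It only counts the weights, and the storage precisions (16 bits and 4 bits per weight) are assumptions chosen for illustration; the article does not say how each model is actually encoded, and a running model needs additional memory beyond its weights.

```python
def weights_size_gb(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Parameter counts mentioned in the article; precisions are illustrative assumptions.
for label, parameters in [("3.8B", 3.8e9), ("7B", 7e9), ("14B", 14e9)]:
    for bits in (16, 4):
        size = weights_size_gb(parameters, bits)
        print(f"{label} at {bits} bits per weight: about {size:.1f} GB")
```

Under these assumptions, a 7-billion-parameter model only has a chance of fitting in 8 GB if its weights are stored in a compact form, which is consistent with the limit stated above.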

Phi3

The first model I'm testing is Phi-3, available under an MIT license. It comes in two versions: 3.8 billion and 14 billion parameters. I am, of course, going to use the first one. To install it, either of the names phi3:3.8b or phi3:mini can be used:

    cpb@raspberrypi:~ $ ollama pull phi3:mini
    pulling manifest
    pulling 3e38718d00bb... 100% ▕█████████████████████████████████████████████████████▏ 2.2 GB >
    pulling fa8235e5b48f... 100% ▕█████████████████████████████████████████████████████▏ 1.1 KB >
    pulling 542b217f179c... 100% ▕█████████████████████████████████████████████████████▏ 148 B >
    pulling 8dde1baf1db0... 100% ▕█████████████████████████████████████████████████████▏ 78 B >
    pulling ed7ab7698fdd... 100% ▕█████████████████████████████████████████████████████▏ 483 B >
    verifying sha256 digest
    writing manifest
    removing any unused layers
    success

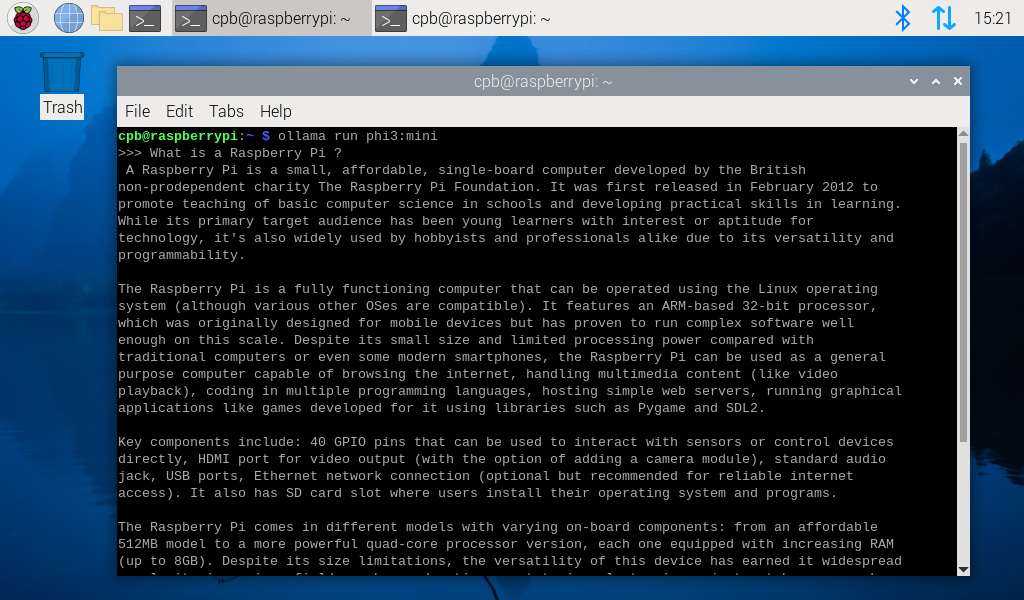

After two minutes, the model is stored on the Raspberry Pi's SD card, ready for use. Let's try it with a simple question:

    cpb@raspberrypi:~ $ ollama run phi3:mini
    >>> Send a message (/? for help)

Ollama took about ten seconds to load and initialize the model before prompting us to ask a question.

    >>> What is a raspberry pi?
    A Raspberry Pi is a small, affordable single-board computer developed by the Raspberry Pi Foundation in the UK. Its purpose is to promote teaching basic computer science in schools and developing digital skills among learners globally, especially those who may lack access to such resources due to economic or other barriers. The device boasts various processor options compatible with a full operating system like Linux, as well as peripherals that allow it to be used for projects ranging from media centers to home automation devices and robotics kits. It has 1-2 USB ports along with audio/video output capabilities and provides enough computational power for educational purposes or simple personal computing tasks through a Linux operating system loaded onto its microSD card, which can be installed via the Raspberry Pi Imager toolkit on Windows PCs, Macs, and some other devices.

    The device measures just over 60mm by 30mm with dimensions similar to those of an SDHC memory card while weighing only about as much (around 24 grams). It is powered through a micro USB port on the board which can be connected directly into a wall adapter or use battery packs, offering convenience and flexibility.

    Raspberry Pi has evolved with several generations of boards: The first generation Raspberry Pi started in 2012; followed by second (released in February 2tyr3), third (Wavesby released in December 2014, also known as the Model B+ and later renamed to "Model B"), fourth gen ("Bullseye", January 2018) which includes Raspberry Pi Zero W; latest fifth generation releases are Broadcom system-on-chip based boards (released in April & September of 2022 as"Centenary") with an emphasis on speed and power efficiency.

    Raspberry Pis also come in different versions, such as the Raspberry Pi Model A which is smaller than its counterparts but has fewer I/O pins; there's even a version that comes without HDMI output called "Zero" or Zero W, released later with Wi-Fi capabilities.

    Raspberry Pi can be used for various applications such as home automation projects like smart lighting systems and security cameras to robotics kits where it acts as the main processing unit of a small drone; educational purposes include learning about programming languages (like Python) or even creating simple games, multimedia players, etc.

    Aside from being used in various personal computing applications for hobbyists, researchers and developers are using Raspberry Pi to create low-cost solutions such as smart city devices like traffic sensors; environmental monitoring systems that measure air quality levels among many other things which showcase the versatility of this small but mighty computer.
    >>> Send a message (/? for help)

Quite talkative, this model. It took just over three minutes to write this text. Since the output is displayed in chunks roughly the size of a word, this corresponds to the pace of unhurried speech. We could feed a speech synthesizer at this rate and have a pleasant conversation.

Because I was skeptical about the quantity and quality of the answer, I restarted the Raspberry Pi with it physically disconnected from the network and asked the same question again. This produced a slightly different answer, but one quite close in substance, as the image below shows:

After leaving Ollama with the /bye command, we're going to do a little experiment.

    >>> Send a message (/? for help)
    >>> /bye
    cpb@raspberrypi:~ $

During installation, Ollama set up a service listening on the Raspberry Pi's TCP port 11434, which offers a REST API to interact with it. We're going to query it from the shell of the Raspberry Pi itself, with the curl command.

We will also take the opportunity to ask it a question in French.

    cpb@raspberrypi:~ $ curl http://localhost:11434/api/generate -d '{
      "model": "phi3:mini",
      "prompt": "Quelle est la quatrième planète du système solaire ?"
    }'

For about a minute, we receive messages from Ollama, which gradually builds up its response. Each message is timestamped, the response field of each line contains a fragment of the text, and the done field is false to indicate that the answer is not complete. The response field is the one to read in order to follow the content:

    {"model":"phi3:mini","created_at":"2024-07-21T05:43:17.084478429Z","response":" La","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:17.369720015Z","response":" qu","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:17.628725439Z","response":"atri","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:17.887893364Z","response":"ème","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:18.146665529Z","response":" plan","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:18.405267322Z","response":"ète","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:18.664291747Z","response":" du","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:18.923346486Z","response":" système","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:19.181757649Z","response":" sol","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:19.4407295Z","response":"aire","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:19.699538905Z","response":",","done":false}
    [...]
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:25.058321233Z","response":" est","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:25.329659597Z","response":" Mars","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:43:25.600804776Z","response":".","done":false}
    [...]
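Reading the answer fragment by fragment quickly becomes tedious, so a small script can glue the response fields back together. The following is a minimal sketch using only the Python standard library; the function names reassemble and ask are mine, and the sample stream is an abridged, hand-shortened version of the messages shown in this article (timestamps and statistics removed).

```python
import json
import urllib.request

# Endpoint used by the curl command in this article.
OLLAMA_URL = "http://localhost:11434/api/generate"


def reassemble(lines):
    """Concatenate the "response" fragments of a stream of JSON lines.

    Stops at the first message whose "done" field is true.
    Accepts str or bytes lines (an HTTP response yields bytes).
    """
    parts = []
    for raw in lines:
        text = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        text = text.strip()
        if not text:
            continue
        message = json.loads(text)
        parts.append(message.get("response", ""))
        if message.get("done"):
            break
    return "".join(parts)


def ask(prompt, model="phi3:mini", url=OLLAMA_URL):
    """Send a prompt to a local Ollama service and return the full answer."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        # The reply object can be iterated line by line, one JSON message per line.
        return reassemble(reply)


# Abridged sample stream (illustrative, no network access needed).
sample_stream = [
    '{"model":"phi3:mini","response":" est","done":false}',
    '{"model":"phi3:mini","response":" Mars","done":false}',
    '{"model":"phi3:mini","response":".","done":false}',
    '{"model":"phi3:mini","response":"","done":true}',
]
print(reassemble(sample_stream))
```

On the Raspberry Pi itself, with the Ollama service running, calling ask("Quelle est la quatrième planète du système solaire ?") should return the whole answer as a single string once the final message arrives.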

After a few moments, Phi-3 stops, not without having given us a short lecture on Martian geography, and ends with a final message whose done field is true:

    {"model":"phi3:mini","created_at":"2024-07-21T05:44:03.022767578Z","response":" les","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:03.331061632Z","response":" plus","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:03.639537317Z","response":" grandes","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:03.948115316Z","response":" structures","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:04.257345336Z","response":" ro","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:04.565302908Z","response":"che","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:04.874108446Z","response":"uses","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:05.182596871Z","response":" conn","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:05.490781443Z","response":"ues","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:05.799564851Z","response":" dans","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:06.10923715Z","response":" le","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:06.447442283Z","response":" système","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:06.755825597Z","response":" sol","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:07.064675857Z","response":"aire","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:07.373813932Z","response":" :","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:07.681658855Z","response":" les","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:07.990091225Z","response":" can","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:08.306772505Z","response":"y","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:08.623075266Z","response":"ons","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:08.940534344Z","response":" Olymp","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:09.257847867Z","response":"us","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:09.574017294Z","response":" M","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:09.890405092Z","response":"ons","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:10.206899964Z","response":" et","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:10.523604097Z","response":" Vall","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:10.840915693Z","response":"es","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:11.157677085Z","response":" Mar","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:11.474101846Z","response":"iner","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:11.790493903Z","response":"is","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:12.106910183Z","response":".","done":false}
    {"model":"phi3:mini","created_at":"2024-07-21T05:44:12.423664722Z","response":"","done":true,"done_reason":"stop","context":[32010,751,1808,707,425,439,25054,5000,3814,12973,868,21423,899,5218,1577,32007,32001,997,439,25054,5000,3814,12973,868,21423,899,5218,29892,427,760,424,316,301,29915,1062,14508,634,427,409,12468,307,13775,316,425,5061,276,29892,707,16852,29889,6340,263,4370,27257,15826,610,966,6033,2708,270,30010,19908,6267,762,29884,316,425,12573,634,454,2245,25677,1671,16852,6303,8648,13704,712,8556,3667,14125,22572,29915,15669,427,16464,4191,19154,376,29924,1503,1642,10793,3814,12973,16053,479,22847,553,15087,1144,696,1173,1314,1027,309,7147,3479,286,7342,272,3246,24889,2406,1190,5061,276,29892,2257,1750,2424,1908,439,29915,1808,263,2653,7848,4760,1318,610,868,6316,1314,9122,28147,361,29889,5701,7101,707,1559,16027,15826,610,316,22114,1700,29883,550,634,553,22044,2406,427,13618,270,30010,279,2395,3805,29878,2445,352,4285,29892,16794,743,376,305,11625,532,613,8288,712,610,966,2298,13830,12286,696,1173,6394,11009,1041,1465,454,21423,899,5218,584,966,508,29891,787,6167,375,341,787,634,19918,267,1085,4983,275,29889],"total_duration":58835102724,"load_duration":15453689,"prompt_eval_count":17,"prompt_eval_duration":3436055000,"eval_count":190,"eval_duration":55339199000}
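The statistics at the end of the final message give a more precise idea of the speed than a stopwatch. As a quick sketch, assuming (as the Ollama API documentation states) that the duration fields are expressed in nanoseconds, the generation rate can be derived from eval_count and eval_duration. The values below are copied from the final message above; the helper name tokens_per_second is mine.

```python
NANOSECONDS_PER_SECOND = 1_000_000_000

# Statistics copied from the final message of the French request above.
final_stats = {
    "total_duration": 58835102724,
    "load_duration": 15453689,
    "prompt_eval_count": 17,
    "prompt_eval_duration": 3436055000,
    "eval_count": 190,
    "eval_duration": 55339199000,
}


def tokens_per_second(count: int, duration_ns: int) -> float:
    """Number of tokens processed per second, for a duration in nanoseconds."""
    return count / (duration_ns / NANOSECONDS_PER_SECOND)


generation_rate = tokens_per_second(
    final_stats["eval_count"], final_stats["eval_duration"]
)
prompt_rate = tokens_per_second(
    final_stats["prompt_eval_count"], final_stats["prompt_eval_duration"]
)
total_seconds = final_stats["total_duration"] / NANOSECONDS_PER_SECOND

print(f"total time:        {total_seconds:.1f} s")
print(f"prompt processing: {prompt_rate:.2f} tokens/s")
print(f"generation:        {generation_rate:.2f} tokens/s")
```

This gives roughly 3.4 generated tokens per second, which agrees with the timestamps of the stream, where consecutive fragments arrive about 0.3 s apart.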

I'm going to try a few other models, but since the size of my SD card is limited, I'll delete the Phi-3 model first.

    cpb@raspberrypi:~ $ ollama rm phi3:mini
    deleted 'phi3:mini'

Gemma

Gemma is a family of models offered by Google DeepMind and optimized for size. There is a minimal version with 2 billion parameters. I'll try version 1.1 of Gemma:

    cpb@raspberrypi:~ $ ollama pull gemma:2b
    pulling manifest
    pulling c1864a5eb193... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 1.7 GB
    pulling 097a36493f71... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 8.4 KB
    pulling 109037bec39c... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 136 B
    pulling 22a838ceb7fb... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 84 B
    pulling 887433b89a90... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 483 B
    verifying sha256 digest
    writing manifest
    removing any unused layers
    success

This model is much more concise in its answers. I asked it the same question several times; its answers in French are not as good as in English (which is to be expected, as I suppose the training corpus is essentially English). Note that in my first question, "know" was taken in the sense of "being personally acquainted with."

    cpb@raspberrypi:~ $ ollama run gemma:2b
    >>> Connais-tu Obi-Wan Kenobi ?
    Je n'ai pas accès à la mémoire ou à l'apprentissage personnel, donc je n'ai pas connaissance de Obi-Wan Kenobi.
    >>> Qui est Obi-Wan Kenobi ?
    Obi-Wan Kenobi est un personnage fictif apparaissant dans la série de romans et de films "Star Wars". Il est un Jedi maître, un sage qui a été originaire sur la planète Terre.
    >>> /bye
    cpb@raspberrypi:~ $ ollama run gemma:2b
    >>> Qui est Obi-Wan Kenobi ?
    Obi-Wan Kenobi est un personnage fictif qui joue un rôle central dans la série de romans de science-fiction et de film de Disney "Star Wars". Il est le maître des Jedi, un ordre monastique qui sauve le monde de la malédiction du Sith
    >>> /bye
    cpb@raspberrypi:~ $ ollama run gemma:2b
    >>> Do you know Obi-Wan Kenobi?
    I do not have personal knowledge or the ability to form opinions. I am a large language model, trained by Google.
    >>>
    cpb@raspberrypi:~ $ ollama run gemma:2b
    >>> Do you know who Obi-Wan Kenobi is?
    Sure. Obi-Wan Kenobi is a fictional character in the Star Wars franchise of films, a series of science fiction movies produced by Lucasfilm. He is a highly skilled and experienced Jedi Knight, one of the most powerful and respected warriors in the galaxy.
    >>> Send a message (/? for help)

Mistral

The Mistral model, in version 0.3 with 7 billion parameters, is one of those offered by the French startup Mistral AI under an Apache license. I install it:

    cpb@raspberrypi:~ $ ollama pull mistral:7b
    pulling manifest
    pulling ff82381e2bea... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 4.1 GB
    pulling 43070e2d4e53... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 11 KB
    pulling 491dfa501e59... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 801 B
    pulling ed11eda7790d... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 30 B
    pulling 42347cd80dc8... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 485 B
    verifying sha256 digest
    writing manifest
    removing any unused layers
    success
    cpb@raspberrypi:~ $

I tried something that turned out to be quite fun: I gave it the first words of Baudelaire's "Spleen" and asked it to continue the quotation:

    cpb@raspberrypi:~ $ ollama run mistral:7b
    >>> Continue cette citation "Quand le ciel bas et lourd pèse comme un couvercle"
    Ou peut-être que c'est l'âme qui plaint sous la pression de ses pensées,
    Et que les nuages gris se sont accumulés en réponse à nos désespoirs.
    Les murs se contractent sous le poids d'un regard triste et déchiré,
    Et chaque voix qui s'élève vers le ciel semble retentir dans une chambre vide.
    <no value>
    Ou peut-être que la nuit s'est abstraite pour nous laisser seul avec nos pensées,
    Pour que nous puissions nous regarder dans le miroir de nos vies et trouver des réponses,
    Et que chaque étoile qui brillait à travers les nuages est une question à laquelle nous avons répondu.

Even though the answer is by no means the text of the original poem, I find that the mood is very close to it, and that overall the generated text has real poetic qualities. Note also the <no value>, which I suppose marks an empty line separating two stanzas here.

I tried again in English with the beginning of Kipling's "If," but the result is much less poetic. Perhaps a bias from having ingested too many self-help texts:

    >>> Please complete this text: "If you can keep your head when all about you are losing theirs and"
    remain calm, and in control. That is the sign of a true leader.

    In other words, it means that even in chaotic or stressful situations, you should be able to think clearly and make rational decisions. This quality is often sought after in leadership roles because it shows that you can maintain composure, focus on the task at hand, and not let emotions cloud your judgment.

    Being able to keep your head when everyone around you is losing theirs can also help build trust among your team or colleagues. They will see that they can rely on you to stay calm and steady in difficult situations, which can help improve morale and increase productivity.

    Overall, keeping your head in stressful situations is an essential skill for anyone who wants to succeed in their personal or professional life. It requires discipline, self-awareness, and the ability to stay focused on the task at hand. But by cultivating this skill, you can become a more effective leader, build stronger relationships, and achieve greater success.

Llama2

The Llama family of models, distributed by Meta under the "Llama Acceptable Use Policy" license, is available in several generations. The most powerful is Llama3, but I think it is too large for the memory of the little Raspberry Pi. Version 2, on the other hand, is available as a 7-billion-parameter model, which I will try to use.

    cpb@raspberrypi:~ $ ollama pull llama2:7b
    pulling manifest
    pulling 8934d96d3f08... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 3.8 GB
    pulling 8c17c2ebb0ea... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 7.0 KB
    pulling 7c23fb36d801... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 4.8 KB
    pulling 2e0493f67d0c... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 59 B
    pulling fa304d675061... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 91 B
    pulling 42ba7f8a01dd... 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 557 B
    verifying sha256 digest
    writing manifest
    removing any unused layers
    success
    cpb@raspberrypi:~ $

The model installs without trouble; unfortunately, loading it into memory is impossible:

    cpb@raspberrypi:~ $ ollama run llama2:7b
    Error: model requires more system memory (8.4 GiB) than is available (7.6 GiB)
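To avoid downloading several gigabytes only to hit this error, the available memory can be checked beforehand. The sketch below parses the MemAvailable field of /proc/meminfo on Linux. It is only an approximation of the check: I do not know exactly how Ollama measures available memory, the function names are mine, and the sample content uses made-up values chosen to match the 7.6 GiB reported in the error message.

```python
GIB = 1024 ** 3


def available_gib(meminfo_text: str) -> float:
    """Return the MemAvailable value of a /proc/meminfo dump, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            kib = int(line.split()[1])  # the kernel reports this value in kB (KiB)
            return kib * 1024 / GIB
    raise ValueError("MemAvailable field not found")


def fits(required_gib: float, meminfo_text: str) -> bool:
    """True if the required amount does not exceed the available memory."""
    return available_gib(meminfo_text) >= required_gib


def read_meminfo(path: str = "/proc/meminfo") -> str:
    """Read the real file on a Linux system (not called in this example)."""
    with open(path, encoding="ascii") as meminfo:
        return meminfo.read()


# Hypothetical sample, chosen so that about 7.6 GiB are available.
sample_meminfo = "MemTotal:        8245760 kB\nMemAvailable:    7969178 kB\n"

print(f"available: {available_gib(sample_meminfo):.1f} GiB")
print("8.4 GiB model fits:", fits(8.4, sample_meminfo))
```

On the Raspberry Pi, fits(8.4, read_meminfo()) would perform the same comparison against the live system.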

Conclusion

The aim of this experiment was to see whether it was possible to use an LLM on a relatively small system, such as those found in the field of embedded systems. Of course, it was not about training the model, which requires computing resources far beyond those of a Raspberry Pi.

The results are surprisingly good. Some small models give very good answers. I really enjoyed this little experiment, and I will probably extend it to other embedded systems, trying to do without the operating system as much as possible.

For many of the tests, the Raspberry Pi was disconnected from the network to make sure there was no interaction with a remote server.

I have heard a lot of criticism of AI for its energy consumption. However, we can see that querying a small LLM consumes at most 27 W (the rating of the Raspberry Pi 5 power supply) for a few dozen seconds. Using a model is therefore very reasonable. Of course, the initial training phase is much more expensive, especially since today's large LLMs have hundreds of billions of parameters. But compared with cryptocurrencies or NFTs, I am not sure AI is the most energy-hungry field.
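To put a number on this, here is a small worked calculation. It treats the 27 W figure as an upper bound (the board was not measured drawing that much) and uses the total_duration reported for the French request earlier in the article, about 58.8 seconds.

```python
SUPPLY_POWER_W = 27.0  # upper bound taken from the article, not a measurement
total_duration_s = 58835102724 / 1e9  # total_duration of the request, in seconds

energy_joules = SUPPLY_POWER_W * total_duration_s
energy_wh = energy_joules / 3600  # 1 Wh = 3600 J

print(f"at most {energy_joules:.0f} J, i.e. about {energy_wh:.2f} Wh per request")
```

So one complete answer costs less than half a watt-hour under this pessimistic assumption.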